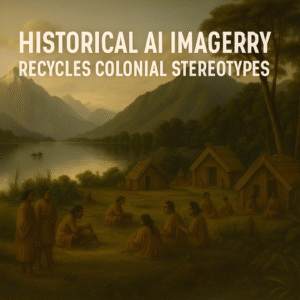

New research from the University of Waikato shows that generative AI tools are unintentionally reproducing colonial-era stereotypes when asked to create historical images of Aotearoa New Zealand.

When prompted for scenes from the 1700s to the 1860s, these tools often generate images that depict empty wilderness, European settlers in heroic roles, and Māori pushed to the margins. The problem runs deep: most training datasets rely on colonial archives that already frame Indigenous people and lands through a European lens.

Big ideas:

- Inherited bias – AI models replicate the bias embedded in their data sources.
- Cultural distortion – These outputs reshape public memory and historical understanding.
- Responsible use – Authentic imagery demands local datasets and cultural collaboration.

∴

Every AI-generated image tells a story. The question is, whose story is it telling?

(Yes, this image was generated by AI.)